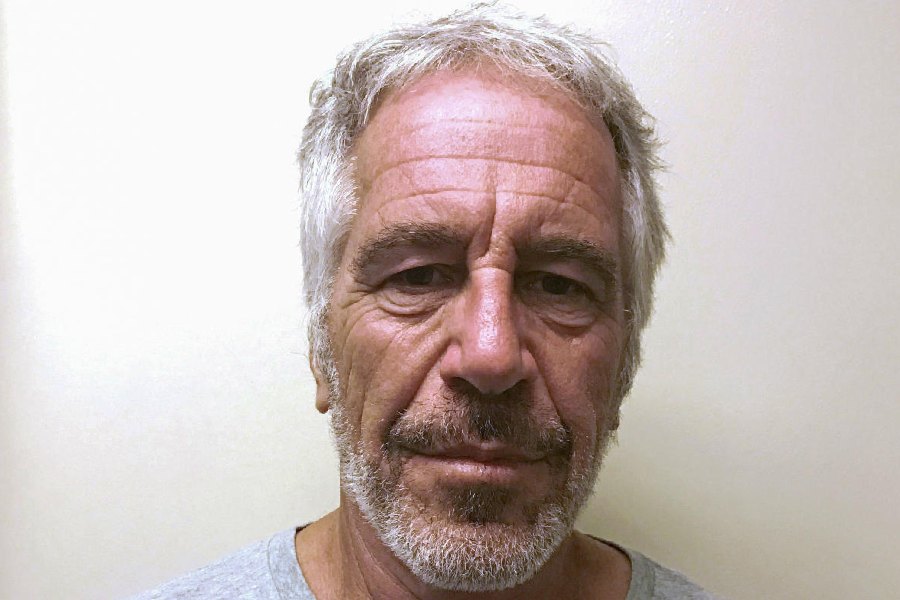

A child psychiatrist who altered a first-day-of-school photo he saw on Facebook to make a group of girls appear nude. A U.S. Army soldier accused of creating images depicting children he knew being sexually abused. A software engineer charged with generating hyper-realistic sexually explicit images of children.

Law enforcement agencies across the U.S. are cracking down on a troubling spread of child sexual abuse imagery created through artificial intelligence technology, from manipulated photos of real children to graphic depictions of computer-generated kids.

Justice Department officials say they're aggressively going after offenders who exploit AI tools, while states are racing to ensure people generating “deepfakes” and other harmful imagery of kids can be prosecuted under their laws.

“We've got to signal early and often that it is a crime, that it will be investigated and prosecuted when the evidence supports it,” Steven Grocki, who leads the Justice Department's Child Exploitation and Obscenity Section, said in an interview with The Associated Press. “And if you're sitting there thinking otherwise, you fundamentally are wrong. And it's only a matter of time before somebody holds you accountable.”

The Justice Department says existing federal laws clearly apply to such content, and it recently brought what's believed to be the first federal case involving purely AI-generated imagery, meaning the children depicted are not real but virtual. In another case, federal authorities in August arrested a U.S. soldier stationed in Alaska accused of running innocent pictures of real children he knew through an AI chatbot to make the images sexually explicit.

Trying to catch up to technology

The prosecutions come as child advocates are urgently working to curb the misuse of technology to prevent a flood of disturbing images that officials fear could make it harder to rescue real victims. Law enforcement officials worry investigators will waste time and resources trying to identify and track down exploited children who don't really exist.

Lawmakers, meanwhile, are passing a flurry of legislation to ensure local prosecutors can bring charges under state laws for AI-generated “deepfakes” and other sexually explicit images of kids. Governors in more than a dozen states have signed laws this year cracking down on digitally created or altered child sexual abuse imagery, according to a review by the National Center for Missing & Exploited Children.

“We're playing catch-up as law enforcement to a technology that, frankly, is moving far faster than we are,” said Ventura County, California, District Attorney Erik Nasarenko.

Nasarenko pushed legislation, signed last month by Gov. Gavin Newsom, that makes clear that AI-generated child sexual abuse material is illegal under California law. Nasarenko said his office could not prosecute eight cases involving AI-generated content between last December and mid-September because California's law had required prosecutors to prove the imagery depicted a real child.

AI-generated child sexual abuse images can be used to groom children, law enforcement officials say. And even if they aren't physically abused, kids can be deeply impacted when their image is morphed to appear sexually explicit.