The largest computer chips these days would usually fit in the palm of your hand. Some could rest on the tip of your finger. Conventional wisdom says anything bigger would be a problem. Now a Silicon Valley startup, Cerebras, is challenging that notion. Recently, the company unveiled what it claims is the largest computer chip ever built. As big as a dinner plate — about 100 times the size of a typical chip — it would barely fit in your lap.

The engineers behind the chip believe it can be used in giant data centres and help accelerate the progress of artificial intelligence in everything from self-driving cars to talking digital assistants such as Amazon’s Alexa.

Many companies are building new chips for AI, including traditional chipmakers like Intel and Qualcomm and other startups in the US, Britain and China. Some experts believe these chips will play a key role in the race to create artificial intelligence, potentially shifting the balance of power among tech companies and even nations. They could feed the creation of commercial products and government technologies, including surveillance systems and autonomous weapons.

AI systems operate with many chips working together. The trouble is that moving big chunks of data between chips can be slow, and can limit how quickly chips analyse that information. “Connecting all these chips together actually slows them down — and consumes a lot of energy,” said Subramanian Iyer, professor at the University of California, Los Angeles, US, who specialises in chip design for artificial intelligence.

Hardware makers are exploring many different options. Some are trying to broaden the pipes that run between chips. Cerebras, a three-year-old company backed by more than $200 million in funding, has taken a novel approach. The idea is to keep all the data on a giant chip so a system can operate faster.

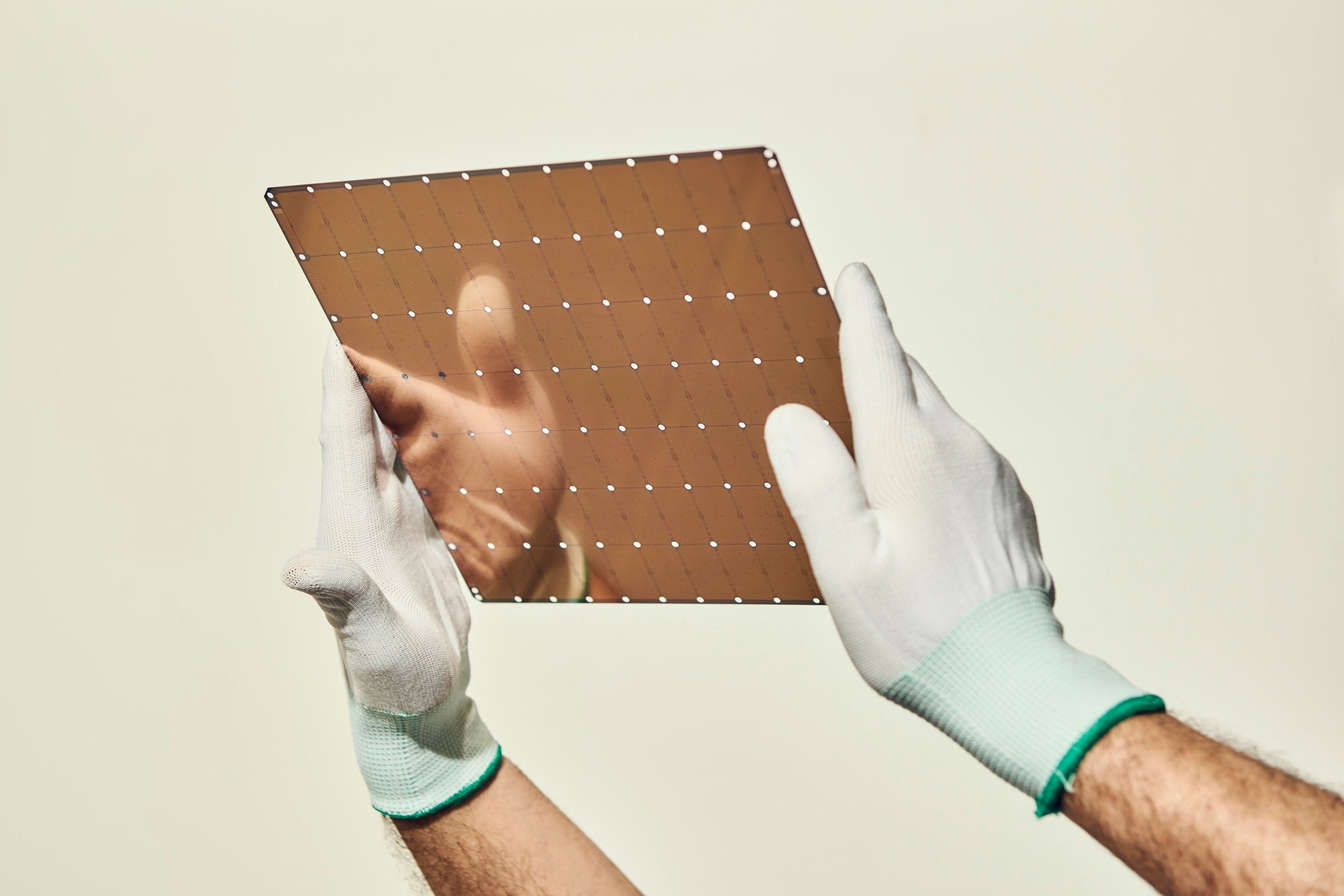

Computer chips are typically built onto round silicon wafers that are about 12 inches in diameter. Each wafer usually contains about 100 chips. Many of these chips, when removed from the wafer, are thrown out and never used. Etching circuits into the silicon is such a complex process that manufacturers cannot eliminate defects. Some circuits just don’t work. This is part of the reason chipmakers keep their chips small: a smaller chip is less likely to contain a defect, so fewer have to be thrown away.
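The arithmetic behind that trade-off can be sketched with a simple Poisson yield model, a common textbook approximation in which the chance that a chip has no defects falls exponentially with its area. This is only an illustration: the defect density below is a made-up figure, not one reported by any manufacturer, and the function names are hypothetical.

```python
import math

# Assumption: a 12-inch wafer is roughly 300 mm across.
WAFER_DIAMETER_MM = 300.0
# Assumption: hypothetical defect density, chosen only for illustration.
DEFECTS_PER_MM2 = 0.001


def wafer_area_mm2(diameter_mm=WAFER_DIAMETER_MM):
    """Area of a round wafer in square millimetres."""
    return math.pi * (diameter_mm / 2) ** 2


def poisson_yield(chip_area_mm2, defects_per_mm2=DEFECTS_PER_MM2):
    """Probability that a chip of the given area has zero defects,
    under a Poisson model with uniformly scattered defects."""
    return math.exp(-defects_per_mm2 * chip_area_mm2)


if __name__ == "__main__":
    # Compare a small chip, a chip that is 1/100 of the wafer,
    # and a chip as large as the whole wafer.
    whole_wafer = wafer_area_mm2()
    for label, area in [
        ("small chip", 100.0),
        ("1/100 of wafer", whole_wafer / 100),
        ("whole wafer", whole_wafer),
    ]:
        print(f"{label}: area {area:.0f} mm^2, "
              f"defect-free probability {poisson_yield(area):.3g}")
```

Under these assumed numbers, the probability of a defect-free chip drops sharply as the area grows, which is why a wafer-sized chip cannot count on being flawless and has to tolerate defects instead.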

Cerebras said it had built a chip the size of an entire wafer.

Others have tried this, most notably a startup called Trilogy, founded in 1980 by the former IBM chip engineer Gene Amdahl. Though it was backed by over $230 million in funding, Trilogy ultimately decided the task was too difficult and it folded after five years.

Nearly 35 years later, Cerebras plans to start shipping hardware to a small number of customers next month. The company says the chip could train AI systems between 100 and 1,000 times faster than existing hardware. Cerebras engineers have divided their giant chip into smaller sections, or cores, with the understanding that some cores will not work. The chip is designed to route information around these defective areas. The price will depend on how efficiently Cerebras and its manufacturing partner, the Taiwan-based company TSMC, can build the chip.
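The general idea of routing information around defective cores can be illustrated with a toy model. The sketch below treats the chip as a grid of cores and uses a breadth-first search to find the shortest path that avoids cores marked as defective. It is an assumption-laden illustration, not a description of how the Cerebras chip actually routes data; the grid layout and the `route` function are hypothetical.

```python
from collections import deque


def route(rows, cols, defective, src, dst):
    """Return the shortest list of (row, col) cores from src to dst that
    avoids every core in `defective`, or None if no such path exists.

    Hypothetical model: cores sit on a rows x cols grid and each core
    can pass data only to its four direct neighbours.
    """
    if src in defective or dst in defective:
        return None
    previous = {src: None}  # maps each visited core to the core before it
    queue = deque([src])
    while queue:
        current = queue.popleft()
        if current == dst:
            # Walk backwards from the destination to rebuild the path.
            path = []
            while current is not None:
                path.append(current)
                current = previous[current]
            return path[::-1]
        r, c = current
        for neighbour in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = neighbour
            in_grid = 0 <= nr < rows and 0 <= nc < cols
            if in_grid and neighbour not in defective and neighbour not in previous:
                previous[neighbour] = current
                queue.append(neighbour)
    return None


if __name__ == "__main__":
    # A 3 x 3 grid whose centre core is defective.
    print(route(3, 3, {(1, 1)}, (1, 0), (1, 2)))
```

In this toy grid, data travelling across the middle row has to detour through a neighbouring row when the centre core is broken, so the chip keeps working at the cost of a slightly longer path.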

The process is a “lot more labour intensive,” said Brad Paulsen, a senior vice-president with TSMC. A chip this large consumes large amounts of power, which means that keeping it cool will be difficult — and expensive. In other words, building the chip is only part of the task.

Cerebras plans to sell the chip as part of a much larger machine that includes elaborate equipment for cooling the silicon with chilled liquid. It is nothing like what the big tech companies and government agencies are used to working with.

“It is not that people have not been able to build this kind of a chip,” said Rakesh Kumar, a professor at the University of Illinois, US, who is also exploring large chips for AI. “The problem is that they have not been able to build one that is commercially feasible.”