A new artificial intelligence system can non-invasively translate the brain activity of a person listening to or imagining a story or watching a silent video into a continuous stream of text, scientists said on Monday.

A study by a four-member research group at the University of Texas at Austin, which included an Indian graduate student, has shown that the system can generate intelligible word sequences from brain activity measured through functional magnetic resonance imaging (fMRI) scans.

The technology might someday help people who are conscious but unable to speak because of stroke or other health disorders, the researchers have said.

The AI system that decodes brain activity relies on computational technology similar to that which powers OpenAI’s ChatGPT, an AI system that interacts in a conversational way.

“The goal of language decoding is to take recordings of a user’s brain activity and predict the words the user is hearing or saying or imagining,” said Jerry Tang, a doctoral student in computer science who led the study. “This is proof-of-concept that language can be decoded from non-invasive recordings.”

The study by Tang and his colleagues is the first to decode continuous language, meaning more than single words or sentences, from non-invasive brain recordings collected through fMRI. Other language decoding systems currently in development require surgical implants.

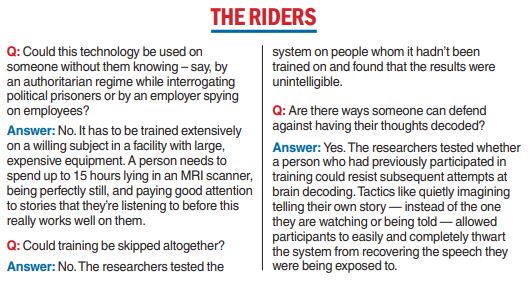

The system has to be tailored to specific users. A person needs to spend up to 15 hours inside an MRI scanner, lying perfectly still and paying close attention to the stories they are listening to, before the system works well for them, said Alexander Huth, assistant professor of neuroscience and computer science at the university.

Through this training, the scientists build a model to predict how a user’s brain activity will respond to other stories.

The AI system’s output is not a word-for-word transcript but is designed to capture the gist of what is being said or thought, albeit with errors. For instance, a participant hearing a speaker say “I don’t have my driver’s licence yet” had their thoughts translated as “She has not even started to learn to drive yet”.

The study appeared in the journal Nature Neuroscience on Monday. The other co-authors are Amanda LeBel, a former researcher in Huth’s lab, and Shailee Jain, a graduate student at the university who completed a BTech at the National Institute of Technology, Surathkal, before moving to the US.

In another experiment, a user heard: “I got up from the air mattress and pressed my face against the glass of the bedroom window expecting to see eyes staring back but instead finding only darkness.”

The decoder translation: “I just continued to walk up to the window and open the glass I stood on my toes and peered out I didn’t see anything and looked up again I saw nothing.”

For a non-invasive decoding system, Huth said, this is a “real leap forward compared to what’s been done before, which is typically single words or short sentences”.

This system decodes continuous language for “extended periods of time with complicated ideas”, he said.

The system currently requires an MRI machine and is therefore impractical for routine use. But, Huth said, the team is exploring whether it would also work with portable brain-imaging systems such as functional near-infrared spectroscopy (fNIRS).

Scientists say the technology currently requires the extensive training of, and cooperation from, the users whose thoughts are to be decoded and is easy to “sabotage”.

But, they say, societies must prepare themselves by regulating such technologies in anticipation of future advanced versions that could overcome such obstacles to mind-reading.

“(For now) we need cooperation to train and run the decoder. We can’t train it on one and run it on another person,” Tang said.

It is also possible to sabotage the decoder – for instance, if a user imagines another story while being told a particular story.