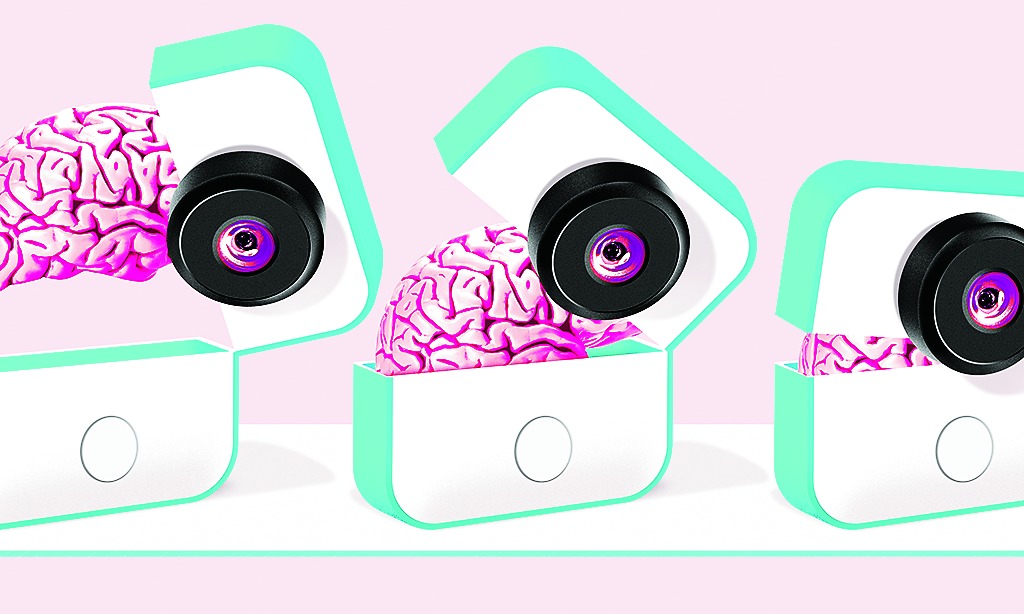

Something strange, scary and sublime is happening to cameras, and it's going to complicate everything you knew about pictures. Cameras are getting brains.

Until a few years ago, all cameras - whether in smartphones, point-and-shoots or CCTV surveillance systems - were like eyes. They captured anything you put in front of them, but didn't understand a whit about what they were seeing.

But all this is changing. There's a new generation of cameras that understand what they see. They're eyes connected to brains, machines that no longer just see what you put in front of them, but can act on it - creating intriguing and sometimes eerie possibilities.

At first, these cameras will promise to let us take better pictures, and to capture moments that might not have been possible with any of the dumb cameras that came before. That's the pitch Google is making with Clips. It uses so-called machine learning to automatically take snapshots of people, pets and other things it finds interesting.

Others are using artificial intelligence to make cameras more useful. You've heard how Apple's newest iPhone uses face recognition to unlock your phone. A startup called Lighthouse AI wants to do something similar for your home, using a security camera that adds a layer of visual intelligence to the images it sees. When you mount its camera in your entryway, it can constantly analyse the scene, alerting you if your dog walker doesn't show up, or if your kids aren't home by a certain time after school.

Digital cameras brought about a revolution in photography, but until now, it was only one of scale: Thanks to microchips, cameras got smaller and cheaper, and we began carrying them everywhere.

Now, AI will create a revolution in how cameras work, too. Smart cameras will let you analyse pictures with prosecutorial precision, raising the spectre of a new kind of surveillance - not just by the government but by everyone around you, even your loved ones at home.

Take Google's Clips, which I've used for the past week. The camera is the size of a tin of mints, and has no screen. On its front, there's a lens and a button. The button takes a picture, but it's there only if you really need it.

Instead, most of the time, you just rely on the camera's intuition, which has been trained to recognise facial expressions, lighting, framing and other hallmarks of nice photos. It also recognises familiar faces: the people you spend the most time with are the ones it deems most interesting to photograph.

Clips watches the scene, and when it sees something that looks like a compelling shot, it captures a 15-second burst picture (something like a short animated GIF or Live Photo on your iPhone).

I took a trip with my family to Disneyland last week, and over two highly photographable days, I barely took a photo. Instead, this tiny device automatically did the work, capturing a couple hundred short clips of our vacation.

Lighthouse, which I've also used for a few weeks, is meant to be an upgrade over the Internet-connected home security cameras that have become popular. Its special trick is a camera system that can sense 3D space and learn to recognise faces, intelligence meant to avoid false alarms. It also has a nifty natural-language interface, so you can ask it straightforward questions. Asking "What did the kids do when I was gone?" will show you clips of your kids from while you were away. It was mostly accurate in telling apart the people in my house, but a Mylar balloon floating around my living room tricked it into thinking I had an intruder.

Both Lighthouse and Clips are guides to the future. Tomorrow, all cameras will have their capabilities. And they won't just watch you - they'll understand, too.